I have a profiles table in an Azure SQL database with around 50 columns and only 244 rows.

I created a view with only two columns, ID and content. The content column concatenates the data from all the other columns in a format like this:

FirstName: John. LastName: Smith. Age: 70. Likes: Gardening, Painting. Dislikes: Soccer.

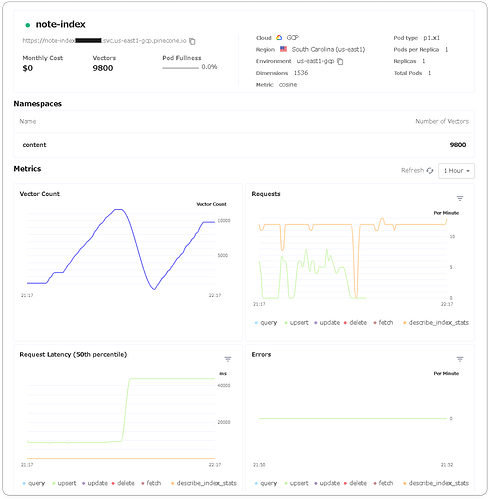
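The `content` column is built in SQL, but for anyone reproducing it (or checking how long each row's text gets), the same concatenation can be sketched in Python. This is a minimal sketch, assuming each row is a dict mapping column names to values; `build_content` is a hypothetical helper, and skipping `None` or empty values is an assumption about how the view treats missing data.

```python
def build_content(row: dict) -> str:
    """Join each non-empty column as 'Column: value' into one string.

    Hypothetical helper mirroring the view's content column; the real
    view is defined in SQL, and skipping empty values is an assumption.
    """
    parts = []
    for column, value in row.items():
        # Skip missing values so they don't produce 'Column: None' fragments
        if value is None or value == "":
            continue
        parts.append(f"{column}: {value}")
    # Separate fields with '. ' and end the string with a final period
    return ". ".join(parts) + "." if parts else ""


row = {
    "FirstName": "John",
    "LastName": "Smith",
    "Age": 70,
    "Likes": "Gardening, Painting",
    "Dislikes": "Soccer",
}
print(build_content(row))
# FirstName: John. LastName: Smith. Age: 70. Likes: Gardening, Painting. Dislikes: Soccer.
```

With pandas, `df.to_dict("records")` yields rows in this dict shape. Because every non-empty column ends up in one string, each row's text (and roughly the number of 400-token chunks the splitter produces from it) grows with how many of the ~50 columns are filled in.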

Then I wrote the following code to index all the content from the view into Pinecone. It works so far, but I noticed something strange:

- There are already over 2,000 vectors and it still hasn't finished. The first iterations were really fast, but now each iteration takes over 18 seconds, and the progress bar estimates more than 40 minutes to finish upserting.

What am I doing wrong, or is this normal?

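```python
from uuid import uuid4

import pandas as pd
import pinecone
import pyodbc
import streamlit as st
import tiktoken
from langchain.embeddings import OpenAIEmbeddings
from langchain.text_splitter import RecursiveCharacterTextSplitter
from stqdm import stqdm

# PINECONE_API_KEY, PINECONE_ENV, OPENAI_EMBEDDING_*, and DATABASE_* are
# assumed to be defined elsewhere (e.g. loaded from environment variables).

pinecone.init(
    api_key=PINECONE_API_KEY,  # find at app.pinecone.io
    environment=PINECONE_ENV,  # next to api key in console
)

st.title('Work in progress')

# tiktoken_len was not shown in the original; this definition is an assumption
# (token count using the cl100k_base encoding of OpenAI embedding models).
tokenizer = tiktoken.get_encoding('cl100k_base')

def tiktoken_len(text):
    return len(tokenizer.encode(text, disallowed_special=()))

embed = OpenAIEmbeddings(
    deployment=OPENAI_EMBEDDING_DEPLOYMENT_NAME,
    model=OPENAI_EMBEDDING_MODEL_NAME,
    chunk_size=1,  # number of texts sent per embedding request
)

cnxn = pyodbc.connect(
    'DRIVER={ODBC Driver 17 for SQL Server};'
    f'SERVER={DATABASE_SERVER}.database.windows.net;'
    f'DATABASE={DATABASE_DB};'
    f'UID={DATABASE_USERNAME};'
    f'PWD={DATABASE_PASSWORD}'
)
query = "SELECT * from views.vwprofiles2;"
df = pd.read_sql(query, cnxn)

index = pinecone.Index("default")

batch_limit = 100  # embed and upsert once this many chunks have accumulated

texts = []
metadatas = []

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=400,  # measured in tokens, via tiktoken_len
    chunk_overlap=20,
    length_function=tiktoken_len,
    separators=["\n\n", "\n", " ", ""],
)

for _, record in stqdm(df.iterrows(), total=len(df)):
    # First get metadata fields for this record
    metadata = {
        'IdentityId': str(record['IdentityId'])
    }
    # Now we create chunks from the record text
    record_texts = text_splitter.split_text(record['content'])
    # Create individual metadata dicts for each chunk
    record_metadatas = [{
        "chunk": j, "text": text, **metadata
    } for j, text in enumerate(record_texts)]
    # Append these to the current batches
    texts.extend(record_texts)
    metadatas.extend(record_metadatas)
    # If we have reached the batch_limit, we can add texts
    if len(texts) >= batch_limit:
        ids = [str(uuid4()) for _ in range(len(texts))]
        embeds = embed.embed_documents(texts)
        index.upsert(vectors=zip(ids, embeds, metadatas))
        texts = []
        metadatas = []

# Embed and upsert whatever is left in the last partial batch
if len(texts) > 0:
    ids = [str(uuid4()) for _ in range(len(texts))]
    embeds = embed.embed_documents(texts)
    index.upsert(vectors=zip(ids, embeds, metadatas))
```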
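To see whether the time per batch goes into embedding or into upserting, each stage can be timed separately. Below is a minimal stdlib-only sketch of the same batching idea; `index_in_batches`, `iter_chunks`, `embed_batch`, and `upsert_batch` are hypothetical names, with the last two standing in for `embed.embed_documents` and `index.upsert`. The `<IdentityId>-<chunk>` ID scheme is also an assumption: unlike `uuid4()`, it yields the same ID for the same chunk on every run, so re-running the script overwrites vectors instead of adding new ones.

```python
import time
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

# (id, text, metadata) for one chunk
Chunk = Tuple[str, str, dict]


def iter_chunks(records: Iterable[Tuple[str, List[str]]]) -> Iterator[Chunk]:
    """Yield one (id, text, metadata) triple per chunk of each record.

    Hypothetical ID scheme '<IdentityId>-<chunk index>': stable across runs,
    so upserting the same record again overwrites rather than duplicates.
    """
    for identity_id, chunks in records:
        for j, text in enumerate(chunks):
            metadata = {"IdentityId": identity_id, "chunk": j, "text": text}
            yield f"{identity_id}-{j}", text, metadata


def index_in_batches(
    records: Iterable[Tuple[str, List[str]]],
    embed_batch: Callable[[List[str]], List[List[float]]],
    upsert_batch: Callable[[List[tuple]], object],
    batch_limit: int = 100,
) -> List[Dict[str, float]]:
    """Embed and upsert chunks in batches of at most batch_limit.

    Returns one timing dict per batch so a slow stage stands out.
    """
    timings: List[Dict[str, float]] = []

    def flush(items: List[Chunk]) -> None:
        ids = [c[0] for c in items]
        texts = [c[1] for c in items]
        metadatas = [c[2] for c in items]
        t0 = time.perf_counter()
        embeds = embed_batch(texts)  # e.g. embed.embed_documents
        t1 = time.perf_counter()
        upsert_batch(list(zip(ids, embeds, metadatas)))  # e.g. index.upsert
        t2 = time.perf_counter()
        timings.append({"size": len(items), "embed_s": t1 - t0, "upsert_s": t2 - t1})

    batch: List[Chunk] = []
    for chunk in iter_chunks(records):
        batch.append(chunk)
        if len(batch) == batch_limit:
            flush(batch)
            batch = []
    if batch:  # last partial batch
        flush(batch)
    return timings


# Demo with stub stages: 3 records x 2 chunks each, batches of 4
fake_records = [(str(i), [f"a{i}", f"b{i}"]) for i in range(3)]
store = {}
for timing in index_in_batches(
    fake_records,
    embed_batch=lambda texts: [[float(len(text))] for text in texts],
    upsert_batch=lambda vectors: store.update({v[0]: v for v in vectors}),
    batch_limit=4,
):
    print(timing)
```

With the objects from the question, `embed_batch` would be `embed.embed_documents`, `upsert_batch` would be `lambda v: index.upsert(vectors=v)`, and `records` could be `((str(r['IdentityId']), text_splitter.split_text(r['content'])) for _, r in df.iterrows())`. One design difference: this sketch flushes at exactly `batch_limit` chunks, whereas the loop in the question flushes after whole records, so its batches can exceed 100. Stable IDs also matter in Streamlit, which can rerun the whole script from the top (for example after a widget interaction).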